
Showing results for tags 'intelligence'.

-

I decided to create a separate thread for AI. Things are changing really rapidly. This company uses AI to replace sales staff in roles that have become a revolving door. It seems to have hit upon a niche that larger companies are very interested in. Not all of these ideas will work out, but you can bet that a significant portion of desk/admin jobs will gradually become redundant over the next 10-15 years. Maybe by then, some of us will be working alongside AIs on some of the routine tasks. https://techcrunch.com/2017/04/09/saleswhale-seed-funding/

- 498 replies

- Tagged with: artificial intelligence, singapore (and 3 more)

-

https://sg.style.yahoo.com/quit-teaching-because-chatgpt-173713528.html

I Quit Teaching Because of ChatGPT

This fall is the first in nearly 20 years that I am not returning to the classroom. For most of my career, I taught writing, literature, and language, primarily to university students. I quit, in large part, because of large language models (LLMs) like ChatGPT.

Virtually all experienced scholars know that writing, as historian Lynn Hunt has argued, is “not the transcription of thoughts already consciously present in [the writer’s] mind.” Rather, writing is a process closely tied to thinking. In graduate school, I spent months trying to fit pieces of my dissertation together in my mind and eventually found I could solve the puzzle only through writing.

Writing is hard work. It is sometimes frightening. With the easy temptation of AI, many, possibly most, of my students were no longer willing to push through discomfort.

In my most recent job, I taught academic writing to doctoral students at a technical college. My graduate students, many of whom were computer scientists, understood the mechanisms of generative AI better than I do. They recognized LLMs as unreliable research tools that hallucinate and invent citations. They acknowledged the environmental impact and ethical problems of the technology. They knew that models are trained on existing data and therefore cannot produce novel research. However, that knowledge did not stop my students from relying heavily on generative AI. Several students admitted to drafting their research in note form and asking ChatGPT to write their articles.

As an experienced teacher, I am familiar with pedagogical best practices. I scaffolded assignments. I researched ways to incorporate generative AI in my lesson plans, and I designed activities to draw attention to its limitations. I reminded students that ChatGPT may alter the meaning of a text when prompted to revise, that it can yield biased and inaccurate information, that it does not generate stylistically strong writing and, for those grade-oriented students, that it does not result in A-level work. It did not matter. The students still used it.

In one activity, my students drafted a paragraph in class, fed their work to ChatGPT with a revision prompt, and then compared the output with their original writing. However, these types of comparative analyses failed because most of my students were not developed enough as writers to analyze the subtleties of meaning or evaluate style. “It makes my writing look fancy,” one PhD student protested when I pointed to weaknesses in AI-revised text.

My students also relied heavily on AI-powered paraphrasing tools such as Quillbot. Paraphrasing well, like drafting original research, is a process of deepening understanding. Recent high-profile examples of “duplicative language” are a reminder that paraphrasing is hard work. It is not surprising, then, that many students are tempted by AI-powered paraphrasing tools. These technologies, however, often result in inconsistent writing style, do not always help students avoid plagiarism, and allow the writer to gloss over understanding. Online paraphrasing tools are useful only when students have already developed a deep knowledge of the craft of writing.

Students who outsource their writing to AI lose an opportunity to think more deeply about their research. In a recent article on art and generative AI, author Ted Chiang put it this way: “Using ChatGPT to complete assignments is like bringing a forklift into the weight room; you will never improve your cognitive fitness that way.” Chiang also notes that the hundreds of small choices we make as writers are just as important as the initial conception. Chiang is a writer of fiction, but the logic applies equally to scholarly writing. Decisions regarding syntax, vocabulary, and other elements of style imbue a text with meaning nearly as much as the underlying research.

Generative AI is, in some ways, a democratizing tool. Many of my students were non-native speakers of English. Their writing frequently contained grammatical errors. Generative AI is effective at correcting grammar. However, the technology often changes vocabulary and alters meaning even when the only prompt is “fix the grammar.” My students lacked the skills to identify and correct subtle shifts in meaning. I could not convince them of the need for stylistic consistency or the need to develop voices as research writers.

The problem was not recognizing AI-generated or AI-revised text. At the start of every semester, I had students write in class. With that baseline sample as a point of comparison, it was easy for me to distinguish between my students’ writing and text generated by ChatGPT. I am also familiar with AI detectors, which purport to indicate whether something has been generated by AI. These detectors, however, are faulty. AI-assisted writing is easy to identify but hard to prove. As a result, I found myself spending many hours grading writing that I knew was generated by AI. I noted where arguments were unsound. I pointed to weaknesses such as stylistic quirks that I knew to be common to ChatGPT (I noticed a sudden surge of phrases such as “delves into”). That is, I found myself spending more time giving feedback to AI than to my students. So I quit.

The best educators will adapt to AI. In some ways, the changes will be positive. Teachers must move away from mechanical activities or assigning simple summaries. They will find ways to encourage students to think critically and learn that writing is a way of generating ideas, revealing contradictions, and clarifying methodologies. However, those lessons require that students be willing to sit with the temporary discomfort of not knowing. Students must learn to move forward with faith in their own cognitive abilities as they write and revise their way into clarity. With few exceptions, my students were not willing to enter those uncomfortable spaces or remain there long enough to discover the revelatory power of writing.

-

https://vulcanpost.com/843379/team-of-ai-bots-develops-software-in-7-minutes-instead-of-4-weeks/

Back in July, a team of researchers demonstrated that ChatGPT is able to design a simple, producible microchip from scratch in under 100 minutes, following human instructions provided in plain English. Last month, another group, working at universities in China and the US, decided to take it a step further and cut humans out of the creative process almost completely.

Instead of relying on a single chatbot answering questions asked by a human, they created a team of ChatGPT 3.5-powered bots, each assuming a different role in a software agency: CEO, CTO, CPO, programmer, code reviewer, code tester, and graphics designer. Each one was briefed on its role and given details about its behaviour and its requirements for communicating with the other participants, e.g. “designated task and roles, communication protocols, termination criteria, and constraints.”

Other than that, however, the artificial intelligence (AI) team of ChatDev (as the company was named) had to come up with its own solutions, decide which languages to use, design the interface, test the output, and provide corrections if needed.

The dream CEO

The bots were to follow an established waterfall development model, with the work split into designing, coding, testing, and documenting phases, and each bot assigned its role throughout the process.

What I found particularly interesting is the exclusion of the CEO from the technical aspects of the process. His role is to provide the initial input and to return for the summary, leaving the techies and designers to do their jobs in peace. Quite unlike the real world! I think many people would welcome our new overlords, who are instructed not to interfere with the job until it’s really time for them to. Just think how many conflicts could be avoided!

Once the entire team was ready to go, the researchers fed it specific software development tasks and measured its performance, both on accuracy and on the time required to complete each task.

Here’s an example of a fully artificial conversation between all of the “members”:

This was later followed by, among others, this exchange between the CTO and the programmer:

Such conversations continued at each stage until it was completed and the information was passed on for interface design, testing, and documentation (such as creating a user manual).

Time is money

After the researchers ran 70 different tasks through this virtual AI software company, over 86 per cent of the produced code executed flawlessly. The remaining 14 per cent or so ran into hiccups due to broken external dependencies and limitations of ChatGPT’s API, so the failures were not a flaw of the methodology itself.

The longest single task took 1,030 seconds (a little over 17 minutes), while the average across all tasks was just six minutes and 49 seconds.

This, perhaps, is not all that telling yet. After all, there are many tasks, big and small, in software development, so the researchers put their findings in context: “On average, the development of small-sized software and interfaces using CHATDEV took 409.84 seconds, less than seven minutes. In comparison, traditional custom software development cycles, even within agile software development methods, typically require two to four weeks, or even several months per cycle.”

At the very least, then, this approach could shave weeks off typical development time, and we are only at the very beginning of the revolution, with AI bots that are still not very sophisticated (and this wasn’t even the latest version of ChatGPT).

And if the time saving wasn’t enough, the basic cost of running each cycle with AI is just… $1. A dollar. Even if we factor in the necessary setup and the input information provided by humans, this approach still offers an opportunity for massive savings.

Goodbye programmers?

Perhaps soon, but not yet. The authors of the paper themselves admit that although the output produced by the bots was usually functional, it wasn’t always exactly what was expected (though that happens to humans too; just think of all the times you did exactly what the client asked and they were still furious).

They also recognised that the AI itself may exhibit certain biases, and that the settings it was deployed with could dramatically change its output, in extreme cases rendering it unusable. In other words, setting the bots up correctly is a prerequisite for success. At least today.

So, for the time being, I think we’re going to see a rapid rise in human-AI cooperation rather than outright replacement. However, it’s also difficult to escape the impression that through it we will be raising our successors and that, in the not-so-distant future, humans will be limited to setting goals for AI to accomplish, while mastering programming languages will be akin to learning Latin.
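The waterfall set-up described in the ChatDev article, where bots hand their work from one phase to the next and the CEO only appears at the start and at the end, can be sketched as a simple sequential pipeline. This is a minimal illustration under stated assumptions, not ChatDev’s actual code: the `Agent`, `Phase`, `stub` and `run_waterfall` names, the role pairings for each phase, the split of testing into review and test steps, and the stub agents (which only label their input instead of calling ChatGPT) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Hypothetical stand-in for a ChatGPT-backed role; no model is called."""
    role: str
    respond: Callable[[str], str]


@dataclass
class Phase:
    name: str
    instructor: Agent  # role that briefs the other one in this phase
    assistant: Agent   # role that produces the phase's artifact


def stub(role: str) -> Agent:
    # The stub only wraps its input in its role name, so hand-offs stay visible.
    return Agent(role, lambda text: f"{role}({text})")


def run_waterfall(task: str, phases: list[Phase]) -> list[tuple[str, str]]:
    """Run the phases strictly in order; each consumes the previous artifact."""
    artifact = task
    history = []
    for phase in phases:
        instruction = phase.instructor.respond(artifact)
        artifact = phase.assistant.respond(instruction)
        history.append((phase.name, artifact))
    return history


ceo, cpo, cto = stub("CEO"), stub("CPO"), stub("CTO")
programmer, reviewer, tester = stub("Programmer"), stub("Reviewer"), stub("Tester")

# Assumed pairings: the CEO only briefs the first phase and the final summary,
# staying out of the technical work in between.
phases = [
    Phase("designing", ceo, cpo),
    Phase("coding", cto, programmer),
    Phase("code review", reviewer, programmer),
    Phase("testing", tester, programmer),
    Phase("documenting", ceo, cpo),
]

for name, artifact in run_waterfall("to-do app", phases):
    print(f"{name}: {artifact}")
```

The first line printed is `designing: CPO(CEO(to-do app))`, and each later line nests the previous phase’s artifact inside the next pair of roles. Swapping a stub for a real model call would only change `respond`; the strictly ordered hand-off is what makes this a waterfall rather than an iterative loop.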

- 20 replies

- Tagged with: programmers, ia bots (and 9 more)

-

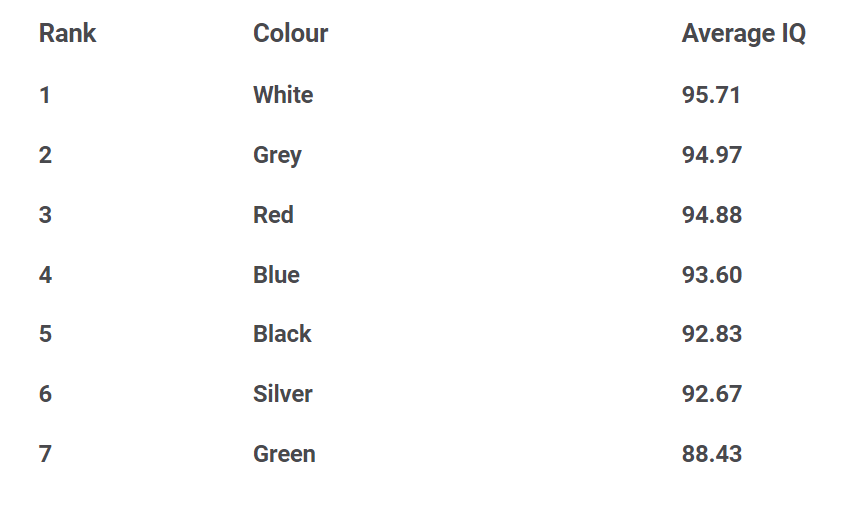

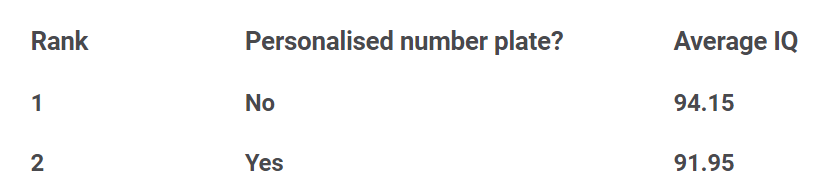

Which Drivers are the Smartest?

https://www.scrapcarcomparison.co.uk/blog/smartest-drivers/

Last updated 16th January 2023

Many generalisations can be made about you as a driver, with people stereotyping your driving style based on your age, gender and job role; we even found in previous research that some types of drivers are more likely to possess psychopathic tendencies than others.

The insult of a “stupid driver” may be thrown around by motorists in a fit of road rage, but can the type of car you drive actually correlate with how intelligent you are? We wanted to find out, so we tasked over 2,000 drivers with taking an IQ test, the scores of which are meant to indicate how clever participants are.

An IQ (intelligence quotient) is a score based on participants’ performance on a set of standardised tests designed to assess intelligence. According to studies, approximately two thirds of the general population will have an IQ score of between 85 and 115, while only around 2.5% will score below 70 and a similar share above 130. Beyond 130, a score of over 144 means you are ‘very gifted’ or ‘highly advanced’ (essentially, a genius), according to the Stanford-Binet Intelligence Scale.

From the results of our study, we’ve calculated average IQ scores for different groups of drivers, categorised by the brand, colour and customisation of the car they drive. Depending on what you drive, the results might shock you. Read on to see where your motor ranks!

Skoda drivers are the most intelligent

Our research showed that Skoda drivers were the most intelligent of the 22 car brands included in the study, with an average IQ score of 99. Interestingly, our previous research found that Skoda drivers were also the least likely to show psychopathic tendencies, perhaps suggesting that Skoda drivers are calmer on the roads.

Suzuki (98.09), Peugeot (98.09), Mini (97.79) and Mazda (95.91) drivers followed to make up the top five most intelligent motorists by car brand, all with average IQ scores of over 95.

At the other end of the scale, our study found that BMW drivers had a lower average IQ score of 91.68, alongside Fiat (90.14) and Land Rover (88.58) drivers. However, it’s worth remembering that the average range of IQ scores worldwide is 85 to 115, so every brand’s average fell within that range.

Which fuel types correlate with high IQ scores?

With the nation aiming to ban the sale of petrol and diesel cars in the next seven years, and more people switching to electric-powered vehicles, we also wanted to find out if the fuel type you choose could correlate with how high your IQ score is.

While electric car drivers are doing their bit to help the environment and protect future generations (a smart move, we’re sure most would agree), it’s petrol drivers who were found to be the most intelligent, according to our research. Petrol car drivers scored an average IQ of 94.35, followed by hybrid (93.89) and diesel (92.91) drivers, while electric car drivers placed fourth with an average score of 90.19, 4.16 points lower than petrol drivers.

Can the colour of your car be linked to how smart you are?

Once you’ve decided on the model and fuel type of your chosen vehicle, the next thing to choose is the colour of your car. But could the colour you choose correlate with how smart you are? Our findings suggest this is the case: those choosing a white car scored an average of 95.71 on the IQ test, a significant 7.28 points more than those choosing green vehicles, whose average IQ score was 88.43…

Personalised number plate owners are less intelligent, according to IQ scores

Finally, we also investigated whether having a personalised number plate would correlate with how high drivers scored on the IQ tests. The personalised number plate industry was estimated to be worth a whopping £1.3 billion in 2022, with many drivers investing in customised plates either to use on their own vehicles or as memorable keepsakes. Our study revealed, though, that private plate owners scored an average of 2.2 points lower on the IQ test than drivers who don’t own one.

Regardless of your IQ score or the type of car you’re driving, make sure you stay safe on the roads to avoid your vehicle prematurely hitting the scrap heap.

Methodology

We partnered with Censuswide to survey 2,024 UK drivers and analyse the brand and colour of the car they currently drive, in addition to whether or not they owned a personalised number plate. In total, 27 car brands were researched; however, five brands were discounted due to insufficient data. Each participant then took a twenty-question IQ test inspired by https://www.arealme.com/iq/en/, which gave each driver an equivalent IQ score. Average IQ scores were then calculated for car brands, car colours and types of number plate, to reveal which drivers are the smartest. Survey conducted in January 2023.
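The article’s headline statistics (about two thirds of people between 85 and 115, and only a small share below 70 or above 130) follow from the convention that modern IQ tests, including the current Stanford-Binet, are scaled so that scores are roughly normally distributed with a mean of 100 and a standard deviation of 15. The sketch below assumes an exact normal distribution (real score distributions only approximate it) and computes those shares with the error function; the `normal_cdf` helper is illustrative, not part of any test’s scoring method.

```python
import math


def normal_cdf(x: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """P(score <= x) for an assumed normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


within_one_sd = normal_cdf(115) - normal_cdf(85)  # share scoring 85 to 115
below_70 = normal_cdf(70)                          # lower tail
above_130 = 1.0 - normal_cdf(130)                  # upper tail
above_144 = 1.0 - normal_cdf(144)                  # 'very gifted' range

print(f"85-115:    {within_one_sd:.1%}")  # 68.3%
print(f"below 70:  {below_70:.1%}")       # 2.3%
print(f"above 130: {above_130:.1%}")      # 2.3%
print(f"above 144: {above_144:.2%}")
```

Each tail comes out at about 2.3%, so the “2.5%” figure is best read as applying to each tail separately rather than to both tails combined.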

- 39 replies

- Tagged with: car brand, iq of driver (and 1 more)

-

Facebook Removes Accounts With AI-Generated Profile Photos

Facebook on Friday removed what it called a global network of more than 900 accounts, pages, and groups from its platform and Instagram that allegedly used deceptive practices to push pro-Trump narratives to about 55 million users. The network used fake accounts, artificial amplification, and, notably, profile photos of fake faces generated using artificial intelligence to spread polarizing, predominantly right-wing content around the web, including on Twitter and YouTube.

Facebook’s investigation connected The BL to The Epoch Times, a conservative media organization with ties to Chinese spiritual group Falun Gong and a history of aggressive support for Donald Trump. Though The BL is ostensibly a US-based media organization, its network’s pages were operated by users in Vietnam and the US who, Facebook says, made widespread use of fake accounts to evade detection and funnel traffic to its own websites.

This represents an alarming new development in the information wars, as it appears to be the first large-scale deployment of AI-generated images on a social network.

https://www.wired.com/story/facebook-removes-accounts-ai-generated-photos/#intcid=recommendations_wired-right-rail-popular_b5c81ee4-f065-4e23-81be-216273db9d3f_popular4-1

In the image below, on the left are the admins for “Patriots for President Trump”: nine of the 15 used AI-generated faces, researchers said. On the right are the admins for “President Trump KAG 2020”: eight of the 16 are fake faces, according to a report...

- 17 replies

-

Do you think a person’s intelligence level affects the way they drive? For example, lorry drivers, who are clearly uneducated (some don’t even have a primary education), drive badly... and so do most taxi drivers. We also see that intelligence level (mostly) determines the type of car one drives, e.g. a school dropout is more likely to drive a Picanto than a BMW, and I see many Getz, Vios and other small, cheap cars driving badly. Is my hypothesis valid? I’d like to hear your opinions.

-

http://www.channelnewsasia.com/stories/sin.../337278/1/.html "SINGAPORE: The search for alleged JI leader of Singapore Mas Selamat Kastari will now become more targeted - based on specific intelligence on where he might be hiding. Deputy Prime Minister Wong Kan Seng on Wednesday said information showed the wanted man is still in Singapore. He gave this update on the manhunt efforts after meeting some 80 Gurkha soldiers involved in the search....." Honestly, after almost a month hiding in the forest, this MSK must either be very well trained in jungle survival skills, or his body has already decomposed somewhere in the reservoirs....